An Intuitive Introduction to Data Structures

Version 2.0

© 2019 Brian Heinold

Licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 Unported License

Links

- A pdf version of the book

- Text files needed for some of the examples and exercises

- Complete listings of some of the classes

- Version 1.0 of the book

Preface

This book covers standard topics in data structures including running time analysis, dynamic arrays, linked lists, stacks, queues, recursion, binary trees, binary search trees, heaps, hashing, sets, maps, graphs, and sorting. It is based on Data Structures and Algorithms classes I've taught over the years. The first time I taught the course, I used a bunch of data structures and introductory programming books as reference, and while I liked parts of all the books, none of them approached things quite in the way I wanted, so I decided to write my own book. I originally wrote this book in 2012. This version is substantially revised and reorganized from that earlier version. My approach to topics is a lot more intuitive than it is formal. If you are looking for a formal approach, there are many books out there that take that approach.

I've tried to keep explanations short and to the point. When I was learning this material, I found that just reading about a data structure wasn't helping the material to stick, so I would instead try to implement the data structure myself. By doing that, I was really able to get a sense for how things work. This book contains many implementations of data structures, but it is recommended that you either try to implement the data structures yourself or work along with the examples in the book.

There are a few hundred exercises. They are all grouped together in Chapter 12. It is highly recommended that you do some of the exercises in order to become comfortable with the data structures and to get better at programming.

If you spot any errors, please send me a note at heinold@msmary.edu

Running times of algorithms

Introduction

In computer science, a useful skill is to be able to look at an algorithm and predict roughly how fast it will run. Choosing the right algorithm for the job can make the difference between having something that takes a few seconds to run versus something that takes days or weeks. As an example, here are three different ways to sum up the integers from 1 to n.

- Probably the most obvious way would be to loop from 1 to n and use a variable to keep a running total.

long total = 0; for (long i=1; i<=n; i++) total += i; - If you know enough math, there is a formula 1+2+… +n =n(n+1)/2. So the sum could be done in one line, like below:

long total = n*(n+1)/2;

- Here is a bit of a contrived approach using nested loops.

long total = 0; for (long i=1; i<=n; i++) for (long j=0; j<i; j++) total++;

Any of these algorithms would work fine if we are just adding up numbers from 1 to 100. But if we are adding up numbers from 1 to a billion, then the choice of algorithm would really matter. On my system, I tested out adding integers from 1 to a billion. The first algorithm took about 1 second to add up everything. The next algorithm returned the answer almost instantly. I estimate the last algorithm would take around 12 years to finish, based on its progress over the three minutes I ran it.

In the terminology of computer science, the first algorithm is an O(n) algorithm, the second is an O(1) algorithm, and the third is an O(n2) algorithm.

This notation, called big O notation, is used to measure the running time of algorithms. Big O notation doesn't give the exact running time, but rather it's an order of magnitude estimation. Measuring the exact running time of an algorithm isn't practical as so many different things like processor speed, amount of memory, what else is running on the machine, the version of the programming language, etc. can affect the running time. So instead, we use big O notation, which measures how an algorithm's running time grows as some parameter n grows. In the example above, n is the integer we are summing up to, but in other cases it might be the size of an array or list, the number of items in a matrix, etc.

With big O, we usually only care about the dominant or most important term. So something like O(n2+n+1) is the same as O(n2), as n and 1 are small in comparison to n2 as n grows. Also, we usually ignore constants. So O(3.47n) is the same as O(n). And if we have something like O(2n+4n3+.6n2-1), we would just write that as O(n3). Remember that big O is just an order of magnitude estimation, and we don't often care about being exact.

Estimating the running times of algorithms

To estimate the big O running time of an algorithm, here are a couple of rules of thumb:

- If an algorithm runs in the same amount of time regardless of how large n is, then it is O(1).

- A loop that runs n times will contributes a factor of n to the big O running time.

- If loops are nested, multiply their running times.

- If one loop follows another, add their running times.

- If the loop variable is increasing in a way such as

i=i*2ori=i/3, instead of changing by a constant amount (likei++ori-=2), then that contributes a factor of log n to the big O running time.

Here are several examples of how to compute the big O running time of some code segments. All of these involve working with an array, and we will assume n is the length of the array.

- Here is code that sums up the entries in an array:

int total = 0; for (int i=0; i<a.length; i++) total += a[i];This code runs in O(n) time. It is a pretty ordinary loop.

- Here is some code involving nested for loops:

for (int i=0; i<a.length; i++) for (int j=0; j<a.length; j++) System.out.println(a[i]+a[j]);These are two pretty ordinary loops, each running for n=

a.lengthsteps. They are nested, so their running times multiply, and overall the running time is O(n2). For each of the n times the outer loop runs, the inner loop has to also run n times, which is where the n2 comes from. - Here are three nested loops:

for (int i=0; i<a.length; i++) for (int j=0; j<a.length-1; j++) for (int k=0; k<a.length-2; k++) System.out.println(a[i]+a[j]*a[k]);This code runs in O(n3) time. Technically, since the second and third loops don't run the full length of the array, we could write it as O(n(n-1)(n-2)), but remember that we are only interested in the most important term, which is n3, as n(n-1)(n-2) can be written as n3 plus a few smaller terms.

- Here are two loops, one after another:

int count=0, count2=0; for (int i=0; i<a.length; i++) count++; for (int i=0; i<a.length; i++) count2+=2;The running time here is O(n+n)=O(2n), which we simplify to O(n) because we ignore constants.

- Here are a few simple lines of code:

int w = a[0]; int z = a[a.length-1]; System.out.println(w + z);

The running time here is O(1). The key idea is that the running time has no dependence on n. No matter how large that array is, this code will always take the same amount of time to run.

- Here is some code with a loop:

int c = 0; int stop = 100; for (int i=0; i<stop; i++) c += a[i];Despite the loop, this code runs in O(1) time. The loop always runs to 100, regardless of how large the array is. Notice that

a.lengthnever appears in the code. - Here is a different kind of loop:

int sum = 0; for (int i=1; i<a.length; i *= 2) sum += a[i];This code runs in O(log n) time. The reason is the that the loop variable is increasing via

i *= 2. It goes up as 1, 2, 4, 8, 16, 32, 64, 128, …. It takes only 10 steps to get to 1000, 20 steps to get to 1 million, and 30 steps to get to 1 billion.The number of steps to get to

nis gotten by solving 2x = n, and the solution to that is log2(n) (which we often write just as log(n)).**For logs, there is a formula, called the change-of-base-formula, that says loga(x)=logb(x)/logb(a). In particular, all logs are constant multiples of each other, and with big O, we don't care about constants. So we can just write O(log n) and say that the running time is logarithmic. - Here is a set of nested loops:

n = a.length; for (int i=0; i<n; i++) { sum = 0; int m = n; while (m > 1) { sum += m; m /= 2; } }This code has running time O(n log n). The outer loop is a pretty ordinary one and contributes the factor of n to the running time. The inner loop is a logarithmic one as the loop variable is cut in half at each stage. Since the loops are nested, we multiply their running times to get O(n log n).

- Here is some code with several loops:

total = 0; int i=0; while (i<a.length) { i++; total += a[i]; } for (int i=0; i<a.length/2; i++) for (int j=a.length-1; j>=0 j--) total += a[i]*a[j];The running time is O(n2). To get this, start with the fact that the while loop runs in O(n) time. The nested loops' running times multiply to give O(n · n/2). The while loop and the nested loops follow one another, so we add their running times to get O(n+n · n/2). But remember that we only care about the dominant term, and we drop constants, so the end result is O(n2).

Common running times

The most common running times are probably O(1), O(log n), O(n), O(n log n), O(n2), and O(2n). These are listed in order of desirability. An O(1) algorithm is usually the most preferable, while an O(2n) algorithm should be avoided if at all possible.

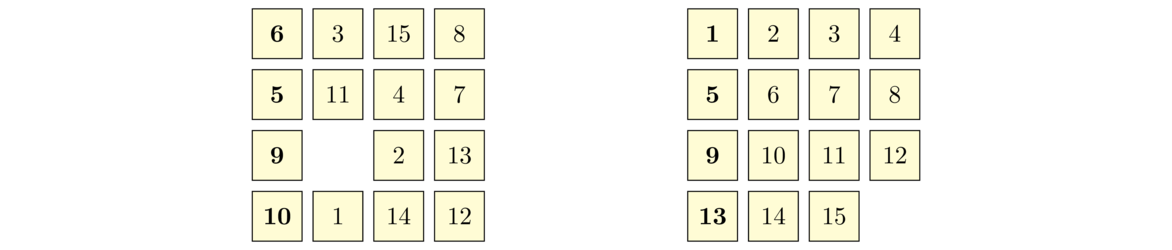

To get a good feel for the functions, it helps to compare them side by side. The table below compares the values of several common functions for varying values of n.

| 1 | 10 | 100 | 1000 | 10000 | |

| 1 | 1 | 1 | 1 | 1 | 1 |

| log n | 0 | 3.3 | 6.6 | 10.0 | 13.3 |

| n | 1 | 10 | 100 | 1000 | 10,000 |

| n log n | 0 | 33 | 664 | 9966 | 132,877 |

| n2 | 1 | 100 | 10,000 | 1,000,000 | 100,000,000 |

| 2n | 2 | 1024 | 1.2× 1030 | 1.1× 10301 | 2.0× 103010 |

Things to note:

- The first line remains constant. This is why O(1) algorithms are usually preferable. No matter how large the input gets, the running time stays the same.

- Logarithmic growth is extremely slow. An O(log n) algorithm is often nearly as good as an O(1) algorithm. Each increase by a factor of 10 in n only corresponds to a growth of about 3.3 in the logarithm. Even at the incredibly large value n=10100 (1 followed by 100 zeros), log n is only about 332.

- Linear growth (O(n)) is what we are most familiar with from real life. If we double the input size, we double the running time. If we increase the input size by a factor of 10, the running time also increases by a factor of 10. This kind of growth is often manageable. A lot of important algorithms can't be done in O(1) or O(log n) time, so it's nice to be able to find a linear algorithm in those cases.

- The next line, for n log n, is sometimes called loglinear or linarithmic growth. It is worse than O(n), but not all that much worse. The most common occurrence of this kind of growth is in the running times of the best sorting algorithms.

- With quadratic growth (O(n2)), things start to go off the rails a bit. We can see already with n=10,000 that n2 is 100 million. Whereas with linear growth, doubling the input size doubles the running time, with quadratic growth, doubling the input size corresponds to increasing the running time by 4 times (since 4=22). Increasing the input size by a factor of 10 corresponds to increasing the running time by 102=100 times. Some problems by their very nature are O(n2), like adding up the elements in a 2-dimensional array. But in other cases, with some thought, an O(n2) algorithm can be replaced with an O(n) algorithm. If it's possible that the n you're dealing with could get large, it is worth the time to try to find a replacement for an O(n2) algorithm.

- The last line is exponential growth, O(2n). Exponential growth gets out of hand extremely quickly. None of the examples we saw earlier had this running time, but there are a number of important practical optimization problems whose best known running times are exponential.

- Other running times — There are infinitely many other running times, like O(n3), O(n4), …, or O(n1.5), O(n!), and O(22n). The ones listed above are just the most common.

Some notes about big O notation

Here are a few important notes about big O notation.

- When we measure running times of algorithms, we can consider the best-case, worst-case, and average-case performance. For instance, let's say we are searching an array element-by-element to find the location of a particular item. The best case would be if the item we are looking for is the first thing in the list. That's an O(1) running time. The worst case is if the element is the last thing, corresponding to a O(n) running time. The average case is that we will need to search around half of the elements before finding the element, corresponding to a O(n/2) running time, which we would write as O(n), since we ignore constants with big O notation. Of the three, usually what we will refer to when looking at the big O of an algorithm is the worst case running time. Best case is interesting, but not often all that useful. Average case is probably the most useful, but often it is harder to find than the worst case, and often it turns out to have the same big O as the worst case.

- We have mostly ignored constants, referring to values such as 3n, 4n, and 5n all as O(n). Often this is fine, but in a lot of practical scenarios this won't give the whole story. For instance, consider algorithm A that has an exact running time of 10000n seconds and algorithm B that has an exact running time of .001n2 seconds. Then A is O(n) and B is O(n2). Suppose for whatever problem these algorithms are used to solve that n tends to be around 100. Then algorithm A will take around 1,000,000 seconds to run, while algorithm B will take 10 seconds. So the quadratic algorithm is clearly better here. However, once n gets sufficiently large (in this case around 10 million), then algorithm A begins to be better. An O(n) algorithm will always eventually have a faster running time than any O(n2) algorithm, but n might have to be very large before that happens. And that value of n might turn out larger than anything that comes up in real situations. Big O notation is sometimes called the asymptotic running time, in that it's sort of what you get as you let n tend toward infinity, like you would to find an asymptote. Often this is a useful measure of running time, but sometimes it isn't.

- Big O is used for more than just running times. It is also used to measure how much space or memory an algorithm requires. For example, one way to reverse an array is to create a new array, run through the original in reverse order, and fill up the new array. This uses O(n) space, as we need to create a new array of the same size as the original. Another way to reverse an array consists of swapping the first and last elements, the second and second-to-last elements, etc. This requires only one additional variable that is used in the swapping process. So this algorithm uses O(1) space. There is often a tradeoff where you can speed up an algorithm by using more space or save space at the cost of slowing down the algorithm.

- Big O notation sometimes can have multiple parameters. For instance, if we are searching for a substring of length m inside a string of length n, we might talk about an algorithm that has running time O(n+m).

- Last, but not least, we have been using big O notation somewhat incorrectly. Instead we really should be using something called big theta, as in Θ(n) instead of O(n). In formal computer science, big O is sort of a “no worse than” running time. That is, O(1) and O(n) algorithms are technically also O(n2) algorithms because a running time of 1 or n is no worse than n2. Big O is sort of like a “less than or equal to”, while big theta is used for “exactly equal to”. However, most people are not really careful about this and use big O when technically they should use big theta. We will do the same thing here. If you are around someone pedantic or if you take a more advanced course in algorithms, then you might want to use big theta, but otherwise big O is fine.

Logarithms and Binary Search

Here is a common way of searching through an array to determine if it contains a particular item:

public static boolean linearSearch(int[] a, int item) {

for (int i=0; i<a.length; i++)

if (a[i]==item)

return true;

return false;

}

This is probably the most straightforward way of searching—check the first item, then the second item, etc. It is called a linear search, and its running time is O(n). However, this is not the most efficient type of search. If the data in the array is in order, then there is an O(log n) algorithm called the binary search that can be used.

To understand how it works, imagine a guess-a-number game. A person picks a random number from 1 to 1000 and you have 10 guesses to get it right. After each guess, you are told if your guess is too high or too low. Your first guess should be 500, as whether it's too high or too low, you will immediately have eliminated 500 numbers from consideration, which is the highest amount you can guarantee removing. Now let's suppose that 500 turns out to be too low. Then you know that the number is between 500 and 1000, so your next guess should be halfway between them, at 750. Suppose 750 turns out to be too high. Then you know the number is between 500 and 750, so your next guess should be 625, halfway between again.

At each step, the number of possibilities is cut in half from 1000 to 500 to 250, and so on, and after 10 of these halvings, there is only one possibility left. A binary search of an array is essentially this process. We look at the middle item of the array and compare it with the thing we are looking for. Either we get really lucky and find it, or else that middle element is either larger or smaller than what we are looking for. If it is larger, then we know to check the first half of the array, and otherwise we check the second half. Then we repeat the process just like with the guess-a-number game, until we either find the element or run out of places to look. In general, if there are n items, then no more than about log2(n) guesses will be needed, making this an O(log n) algorithm. Here is the code for it:

public static boolean binarySearch(int[] a, int item) {

int start=0, end=a.length-1;

while(end >= start) {

int mid = (start + end) / 2;

if (a[mid] == item)

return true;

if (a[mid] > item)

end = mid - 1;

else

start = mid + 1;

}

return false;

}

Inside the loop, the code first locates the middle index** A better approach is to use start + ((end - start) / 2) which avoids a potential overflow problem, but for clarity, we have taken the simpler approach. We then compare the middle item to the one we are looking for and adjust the start or end variables, which has the effect of focusing our attention on one half or the other of the array.

If possible, a binary search should be used over a linear search. For instance, a linear search on a list of 10 billion items will involve 10 billion checks if the item is not in the list, while a binary search will only require log2(10 billion})= 34 checks.

However, binary search does require the data to be sorted, and the initial sort will take longer than a single linear search. So a linear search would make sense if you are only doing one search, and it would also make sense if the items in the array are of a data type that can't be sorted. A linear search would also be fine for very small arrays.

Lists

Dynamic arrays

Throughout these notes, we will look at several data structures. For each one we will show how to implement it from scratch so that we can get a sense for how it works. For many of these data structures, we will also talk about how to use the versions of them that are built in to Java. Generally, the classes we build here are for demonstration purposes only. They are so we can learn the internals of these data structures. It is recommended to use the ones built in to Java whenever possible as Java's classes have been much more carefully designed and tested.

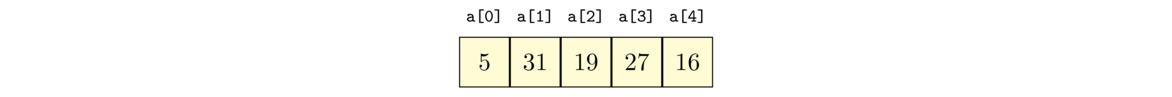

The first data structure we will talk about is the list. A list is a collection of objects, such as [5, 31, 19, 27, 16], where order matters and there may be repeated elements. We will look at two ways to implement lists: dynamic arrays and linked lists.

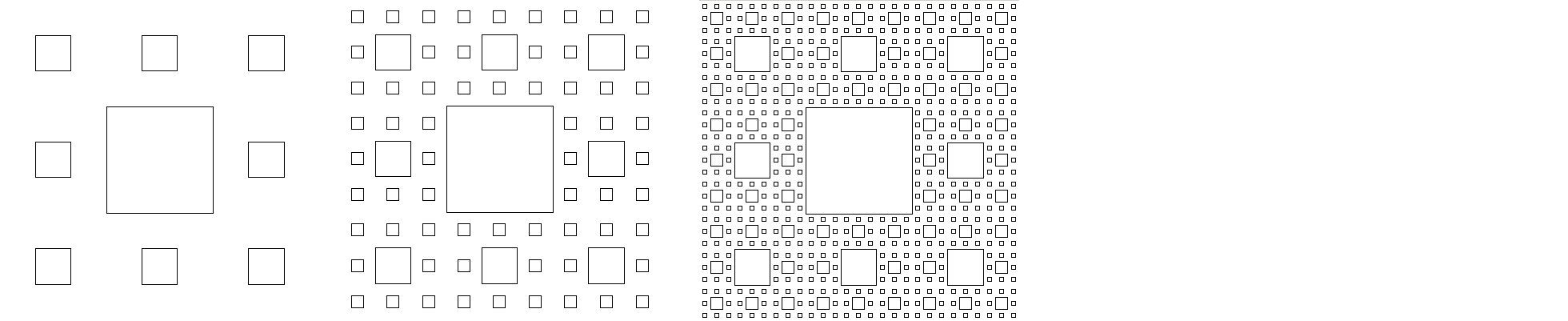

Let's start out with looking at an ordinary Java array. An array is data stored in a contiguous chunk of memory, like in the figure below.

Each cell in the array has the same data type, meaning it takes up the same amount of space. This makes it quick to access any given location in the array. For instance, to access the element at index 5 in an array a in Java, we use a[5]. Internally, Java finds that location via a simple computation, which takes the starting memory location of the array and adds to it 5 times the size of the array's data type. In short, getting and setting elements in an array is a very fast O(1) operation.

The main limitation of Java's arrays is that they are fixed in size. Often we don't know up front how much space we will need. We can always just create our arrays with a million elements apiece, but that's wasteful. This brings us to the concept of dynamic arrays. A dynamic array is an array that can grow as needed to accommodate new elements. We can create this data structure using an ordinary array. We can start the array small, maybe making it 10 items long. Once we use up those 10 spots in the array, we create a new array and copy over all the stuff from the old array to the new one. Java will automatically free up the space in that old array to be used elsewhere in a process called garbage collection.

One question is how big we should make the new array. If we make it too small, then the expensive operation of copying the old array over will have to be done too often. A simple and reasonable thing to do is to make the new array double the size of the old one.

Implementing a dynamic array

Here is the start of a dynamic array class:

public class AList {

private int[] data;

private int numElements;

private int capacity;

public AList() {

numElements = 0;

capacity = 10;

data = new int[capacity];

}

}

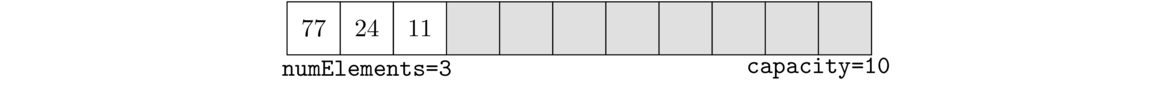

We have an array to hold the data, a variable called numElements thats keeps track of how many data values are in the list, and a variable called capacity that is the size of the data array. Once numElements reaches capacity, we will run out of room in the current array.

For instance, consider the list [77, 24, 11]. It has three elements, so numElements is 3. The capacity in the figure is 10.**Note that capacity is always the same as data.length, so we could just use that and not have a capacity variable at all. However, I think it's a little clearer to have a separate variable.

Adding elements

To add an element, there are a few things to do. First, we need to check if the array is at capacity. If so, then we need to enlarge it. We do that by calling a separate enlarge method. In terms of actually adding the element to the array, we use the numElements variable to tell us where in the data array to add the element. Specifically, it should go right after the last data value. We also need to increase numElements by 1 after adding the new item. Here is the code:

public void add(int item) {

if (numElements >= capacity)

enlarge();

data[numElements] = item;

numElements++;

}

Below is the code that enlarges the array as needed. What we do is create a new array that is twice the size of the current one, then copy over all data from the old array into this one. After that, we update the capacity variable and point the data variable to our new array.

private void enlarge() {

int[] copy = new int[capacity*2];

for (int i=0; i<capacity; i++)

copy[i] = data[i];

capacity *= 2;

data = copy;

}

Notice the design decision to make enlarge a private method. This is because it is part of the internal plumbing that our class uses to make things work, but it's not something that users of our class should need to see or use.**In a real situation, maybe there could be a few cases where users might want to be able to enlarge the array themselves, though likely 99.9% of users would not ever use that feature, and some may accidentally misuse it.

Displaying the contents of the dynamic array

We now have a working dynamic array, but we don't have any way yet to see what is in it. To do that, we will write a toString method. The toString method is a special method that is designed to work with Java's printing methods. So if we create an AList object called list, then System.out.println(list) will automatically call our toString method to get a string representation of our list to print. Here is the method:

@Override

public String toString() {

if (numElements == 0)

return "[]";

String s = "[";

for (int i=0; i<numElements-1; i++)

s += data[i] + ", ";

s += data[numElements-1] + "]";

return s;

}

It returns the items in the list with square brackets around them and commas between them. The code builds up a string one item at a time.**It would be more efficient to use Java's StringBuilder class for this, but to keep things simple we won't do that. In order to avoid a comma being generated after the last element, we stop the loop one short of the end, and add the last element in separately. Note also the special case for an empty list.

Here is some code to test out our class. We first add a couple of elements and print out the list, and then we add enough elements to force the array to have to be enlarged, to make sure that process works.

public static void main(String[] args) {

AList list = new AList();

list.add(2);

list.add(4);

System.out.println(list);

for (int i=0; i<10; i++)

list.add(i);

System.out.println(list);

}

When testing out a class like this, be sure also test out all the various edge cases you can think of. For example, if testing out a method that inserts items into a list, edge cases would be inserting at the start or end of a list or inserting into an empty list. In general, try to think of as many interesting scenarios that can give your code trouble. The extra time spent testing now can save a lot more time in the future tracking down bugs.

More methods

To make our list class more useful, there are a few other methods we might add. Some of these methods are also good for programming practice, as they demonstrate useful techniques for working with lists and arrays.

public int get(int index) {

return data[index];

}

public void set(int index, int value) {

data[index] = value;

}

In both cases, we really should do some error-checking to make sure the index chosen is valid. Both methods should have code like the following:

if (index < 0 || index >= numElements)

throw new RuntimeException("List index " + index + " is out of bounds.");

For the sake of simplicity, since our goal is to understand how data structures work, we will usually not do much error-checking, as real error-checking code can often become long enough to obscure how the data structure works.

public int size() {

return numElements;

}

Lists often have a separate method called isEmpty that tells if the list has anything in it or not. We can do that by checking if numElements is 0 or not. A standard way to do that would involve four lines of code with an if/else statement. However, there is a slick way to do this in one line of code, shown below.

public boolean isEmpty() {

return numElements == 0;

}

In Java, numElements==0 is a boolean expression that returns true or false. While we usually use that sort of thing inside an if statement, there is nothing stopping us from using it other places. So rather than use an if/else, we can shortcut the process by just returning the value of this expression. This is a common trick you will see when reading other people's code.

public boolean contains(int value) {

for (int i=0; i<numElements; i++)

if (data[i] == value)

return true;

return false;

}

A common mistake is to have an else statement inside the loop that returns false. The problem with this is that the code checks the first item and immediately returns false if it is not the thing being searched for. In the approach above, we only return false if the code gets all the way through the loop and hasn't found the desired element.

public void reverse() {

int[] rev = new int[capacity];

int j = 0;

for (int i=numElements-1; i>=0; i--) {

rev[j] = data[i];

j++;

}

data = rev;

}

This is a relatively straightforward approach that loops through the data array backwards and fills up a new array with those elements and then replaces the old data array with the new one. Note that we use a separate index variable j to advance us through the new array. Here is another approach that avoids creating a new array:

public void reverse2() {

for (int i=0; i<numElements/2; i++) {

int hold = data[i];

data[i] = data[numElements-1-i];

data[numElements-1-i] = hold;

}

}

The way this approach works is it swaps the first and last element, then it swaps the second and second-to-last elements, etc. It stops right at the middle of the list.

Note: A common mistake in writing these and other methods is to loop to capacity instead of numElements. The array's data values end at index numElements-1. Everything beyond there is essentially garbage.

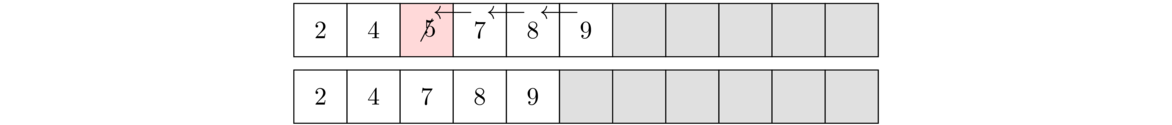

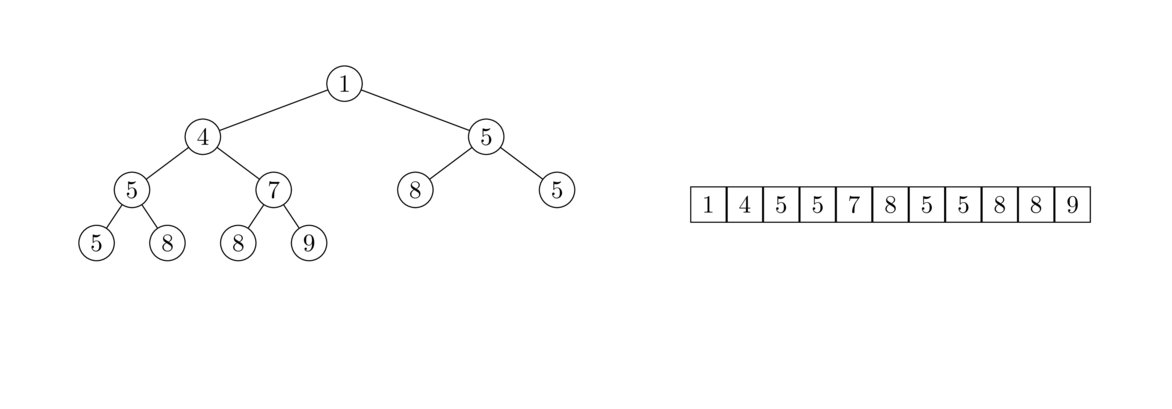

An efficient way to delete an item from the array is to slide everything to the right of it down by one index. As each item is being slid down, it overwrites the item in that place. For an illustration, see the figure below where the element 5 is being deleted. The algorithm starts by overwriting 5 with 7, then overwriting 7 with 8, and then overwriting 8 with 9. The last item in the list is not overwritten, but by decreasing numElements by 1, we make that index essentially inaccessible to the other methods because all of our methods never look beyond index numElements-1.

Here is how we would code it:

public void delete(int index) {

for (int i=index; i<numElements; i++)

data[i] = data[i+1];

numElements--;

}

delete method, except that we work through the array in the opposite order. Also, since we are adding to the list, we need to enlarge the array if we are out of room. The code is below:

public void insert(int index, int value) {

if (numElements >= capacity)

enlarge();

for (int i=numElements-1; i>=index; i--)

data[i+1] = data[i];

data[index] = value;

numElements++;

}

Running times

Looking back at the code we wrote, here are the big O running times of the methods.- The

addmethod is O(1) most of the time. Theenlargemethod is O(n) and every once in a while theaddmethod needs to call it.**Computer scientists would say the running time ofadd()is amortized O(1) because the cost of the slowenlarge()method is spread out over all of the adds. For instance, if we calladd()1000 times in a row, theenlarge()method will be called 7 times (when the list size reaches 10, 20, 40, 80, 160, 320, and 640), but those 7enlarge()calls, spread out over 1000 adds, average out to an O(1) running time. - The

sizeandisEmptymethods are O(1). Each of them consists of a single operation with no loop involved. In some implementations of a list data structure, thesizemethod would be O(n), where you would loop through the list and count how many items you see. However, since we are maintaining a variablenumElementsthat keeps track of the size, that counting is spread out over all the calls to theaddmethod. - The

getandsetmethods are O(1). Each of them consists of a single operation with no loop involved. - The

reversemethod is O(n). The firstreversemethod we wrote has a single loop that runs through the entire array, so it's O(n). The secondreversemethod loops through half of the array, so it's O(n/2), but since we ignore constants, we say it's O(n). Still in a practical sense, the second method is about twice as fast as the first. - The

contains,insert, anddeletemethods are all O(n). Remember that for big O, we are usually concerned with the worst case scenarios. For thecontainsmethod, this would be if the item is not in the list. For theinsertanddeletemethods, this would be for inserting/deleting at index 0. In all these cases, we have to loop through the entire array, so we get O(n).

Linked lists

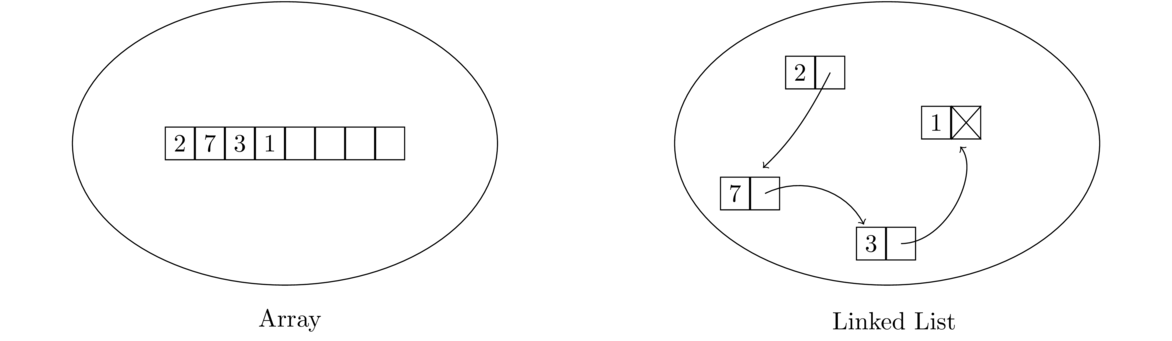

Linked lists provide a completely different approach to implementing a list data structure. With arrays, the elements are all stored next to each other, one after another, in memory. With a linked list, the elements can be spread out all over memory.

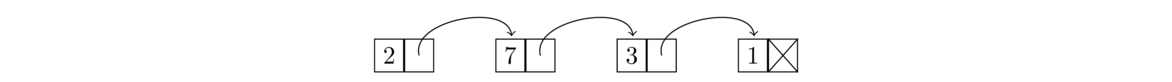

In particular, each element in a linked list is paired with a link that points to where the next element is stored in memory. So a linked list is just sort of strung along from one element to the next across memory, as in the figure below.

The linked list shown above is often drawn like this:

Each item in a linked list is called a node. Each node consists of a data value as well as a link to the next node in the list. The last node's link is null; in other words, it is a link going nowhere. This indicates the end of the list.

Implementing a linked list

Each element or node in the list is a pair of things: a data value and a link. In Java, to pair things like this, we create a class, which we will call Node. The data type of the value will be an integer. But what about the link's data type? It points to another Node object, so that's what its data type will be—a Node. So the class will contain references to itself, making it recursive in a sense. Here is the outline of the linked list class, with the Node class being a private inner class.

public class LList {

private class Node {

public int value;

public Node next;

public Node(int value, Node next) {

this.value = value;

this.next = next;

}

}

private Node front;

public LList() {

front = null;

}

}

The linked list class itself only has one class variable, a Node variable called front that keeps track of which node is at the front of the list. The Node class is made private since it is an internal implementation detail of the LList class, not something that users of our list need to worry about. The Node class is quite simple, having just the two class variables and a constructor that sets their values.**Note that those variables are public. This keeps the syntax a little simpler. In Java, the convention is usually to make things private. But here our goal is learn about data structures, and using private variable with getters and setters will complicate the code, making it harder to see the essential ideas of linked lists.

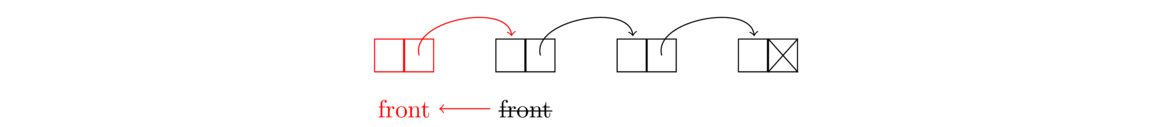

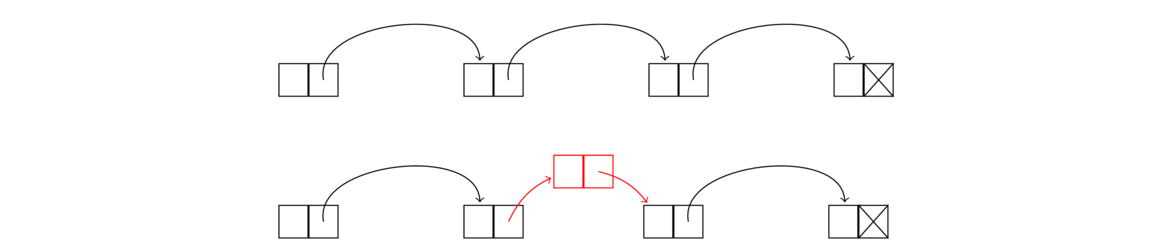

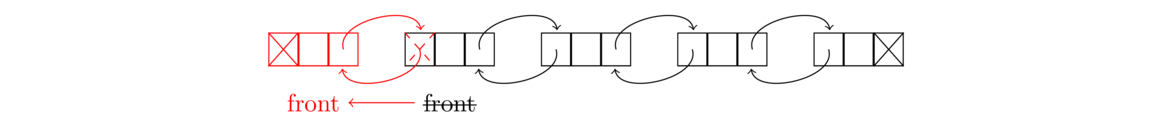

Let's look first at adding to the front of a linked list. To do that, we need to do three things: create a new node, point that new node to the old front of the list, and then make the new node into the new front of the list. See the figure below.

It often helps to draw figures like this first and use them to create the linked list code. Here is code that does this process:.

Node node = new Node(value, null) node.next = front; front = node;

Working with linked lists often involves creating new nodes and moving links around. Below is an imaginative view of what memory might look like, with a linked list before and after adding a new item at the front.

We see that we have to first create the new node in memory. As we're creating that node, we specify what it will point to, and after that we have to move the front marker from its old location so that it's now indicating the new node is the front.

The three lines of code can actually be done in a single line, like below:

public void addToFront(int value) {

front = new Node(value, front);

}

Since we don't have a pointer to the back of the list, like we have one pointing to the front of the list, adding to the back is a bit more work. We have to walk the list item-by-item to get to the back and then create and add a new node there. The loop that we use to walk the list has the following form:

Node node = front;

while (node.next != null)

node = node.next;

This loop is one that is very useful for looping through linked lists. The idea of it is that we create a Node variable called node that acts as a loop variable, similar to how the variable i is used in a typical for loop. This Node variable starts at the beginning of the list via the line node = front. Then the line node = node.next moves the node variable through the list one node at a time. The loop condition node.next != null stops us just short of the last variable. A lot of times we will want to use node != null in its place to make sure we get through the whole list. In both cases, remember that the way we know when to stop the loop is that the end of the list is marked by a null link.

Here is the complete add method:

public void add(int value) {

if (front == null)

front = new Node(value, null);

else {

Node node = front;

while (node.next != null)

node = node.next;

node.next = new Node(value, null);

}

}

Note the special case at the start. Checking if front==null checks if the list is empty. In particular, when adding to an empty list, not only do we have to create a new node, but we also need to set the front variable to point to it. If we leave this code out, we would get a very common linked list error message: a null pointer exception. What would happen is if the list is empty, then node=front will set node to null. Then when we try to check node.next, we are trying to get a field from something that has no fields (it is null). That gives a null pointer exception.

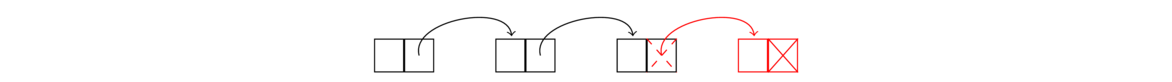

The last line of code of the method creates the new node and points the old last node of the list to this new node. This is shown in the figure below.

Note also that the code that creates a new node sets its link to null. This is because the end of a list is indicated by a null link, and the node we are creating is to go at the end of the list.

toString()toString method for dynamic arrays except that in place of the for loop that is used for dynamic arrays, we use a linked list while loop. Here is the code:

@Override

public String toString() {

if (front == null)

return "[]";

String s = "[";

Node node = front;

while (node.next != null) {

s += node.value + ", ";

node = node.next;

}

s += node.value + "]";

return s;

}

Now we can test out our code to make sure it is working:

public static void main(String[] args) {

LList list = new LList();

list.add(1);

list.add(2);

System.out.println(list);

}

It's worth stopping here to take stock of what we have done. Namely, we have managed to create a list essentially from scratch, without using arrays, where the array-like behavior is simulated by linking the items together.

size and isEmpty

public int size() {

int count = 0;

Node node = front;

while (node != null) {

count++;

node = node.next;

}

return count;

}

To check if a list is empty, we need only check if the front variable points to something or if it is null. This can be done very quickly, like below:

public boolean isEmpty() {

return front == null;

}

public int get(int index) {

int count = 0;

Node node = front;

while (count < index) {

count++;

node = node.next;

}

return node.value;

}

public void set(int index, int value) {

int count = 0;

Node node = front;

while (count < index) {

count++;

node = node.next;

}

node.value = value;

}

To delete a node, we reroute the link of the node immediately before it as below (the middle node is being deleted).

To do this, we step through the list node-by-node, using a loop similar to the ones for the get and set methods. Once the loop variable node reaches the node immediately before the one being deleted, we use the following line to perform the deletion:

node.next = node.next.next;

This line reroute's node's link around the deleted node so it points to the node immediately after the deleted node. That deleted node is still sitting there in memory, but with nothing now pointing to it, it is no longer part of the list. Eventually Java's garbage collection algorithm will come around and allow that part of memory to be reused for other purposes.

The full delete code is below. Note that the line above will not work for deleting the first index, so we need a special case for that. To handle it, it suffices to do front = front.next, which moves the front variable to point to the second thing in the list, making that the new front.

public void delete(int index) {

if (index == 0)

front = front.next;

else {

int count = 0;

Node node = front;

while (count < index-1) {

count++;

node = node.next;

}

node.next = node.next.next;

}

}

Note also that we don't need a special case for deleting the last thing in the list. Try to see why the code will work just fine in that case.

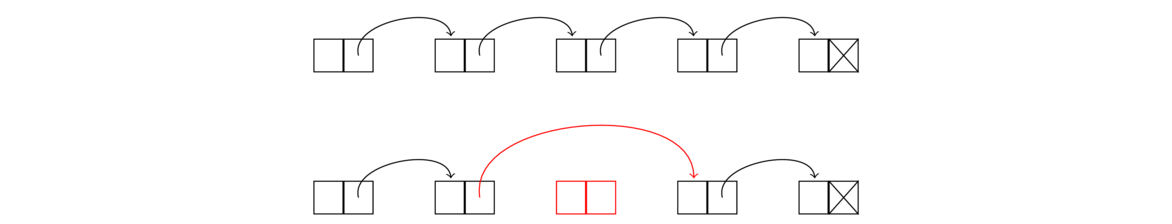

insert method:

We can see that to insert a node, we go the node immediately before index we need. Let's call it node. We point node's link to a new node that we create, and make sure the new node's link points to what the node used to point to. The node creation and rerouting of links can be done in one line, like this:

node.next = new Node(value, node.next);

Much of the code for inserting into a list is similar to the delete method. In particular, we need a special case for inserting at the beginning of the list (where we can simply call the addToFront method that we already wrote), and the code that loops to get to the insertion point is the same as the delete method's code. Here is the method:

public void insert(int index, int value) {

if (index == 0)

addToFront(value);

else {

int count = 0;

Node node = front;

while (count < index-1) {

count++;

node = node.next;

}

node.next = new Node(value, node.next);

}

}

Working with linked lists

The best way to get comfortable with linked lists is to write some linked list code. Here are a few helpful hints for working with linked lists.

Basics

First, remember the basic idea of a linked list, that each item in the list is a node that contains a data value and a link to the next node in the list. Creating a new node is done with a line like below:

Node node = new Node(19, null);

This creates a node with value 19 and a link that points to nothing. Suppose we want to create a new node that points to another node in the list called node2. Then we would do the following:

Node node = new Node(19, node2);

To change the link of node to point to something else, say the front of the list, we could do the following:

node.next = front;

Remember that the front marker is a Node variable that keeps track of which node is at the front of the list. The line below moves the front marker one spot forward in the list (essentially deleting the first element of the list):

front = front.next;

Looping

To move through a linked list, a loop like below is used:

Node node = front;

while (node != null)

node = node.next;

This will loop through the entire list. If we use node.next != null, that will stop us at the last element instead of moving through the whole list. If we want to stop at a certain index in the list, we can use a loop like the one below (looping to index 10):

Node node = front;

int count = 0;

while (count < 10) {

count++;

node = node.next;

}

Using pictures

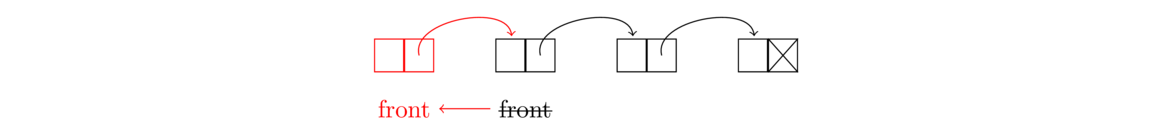

Many linked list methods just involve creating some new nodes and moving some links around. To keep everything straight, it can be helpful to draw a picture. For instance, below is the picture we used earlier when describing how to add a new node to the front of a list:

And we translated this picture into the following line:

front = new Node(value, front);

This line does a few things. It creates a new node, points that node to the old front of the list, and moves the front marker to be at this new node.

Edge cases

When working with linked lists, as with many other things, it is important to worry about edge cases. These are special cases like an empty list, a list with one element, deleting the first or last element in a list, etc. Often with linked list code, one case will handle almost everything except for one or two special edge cases that require their own code.

For instance, as we saw earlier, to delete a node from a list, we loop through the list until we get to the node right before the node to be deleted and reroute that node's link around the deleted node. But this won't work if we are deleting the first element of the list. So that's an edge case, and we need a special case to handle that.

Null pointer exceptions

When working with nodes, there is a good chance of making a mistake and getting a null pointer exception from Java. This usually happens if you use an object without first initializing it. For example, the following will cause a null pointer exception:

Node node;

// some code not involving node might go here...

if (node.value == 3)

// some more code goes here...

Since the node variable was not initialized to anything, its current value is the special Java value, null. When we try to access node.value, we get a null pointer exception because node is null and has no field called value.

The correction is to either set node equal to some other Node object or initialize it like below:

Node node = new Node(42, null);

In summary, if you get a null pointer exception, it often indicates an object that has been declared but not initialized. It also frequently happens with a linked list loop that accidentally loops past the end of the list (which is indicated by null).

A couple of trickier examples

[1,2,3], after calling this method it will be [1,1,2,2,3,3]. Here is the code:

public void doubler() {

Node node = front;

while (node != null) {

node.next = new Node(node.value, node.next);

node = node.next.next;

}

}

We do this with a single loop. At each step of the loop, we make a copy of the current node and point the current node to the copy. Then we move to the next node. But we can't just do node = node.next because that will move from the current node to its copy, and we will end up with an infinite loop that keeps copying the same value over and over. Instead, node = node.next.next moves us forward by two elements in the list.

Note that we could do this method by looping through the list and calling the insert method. However, that would be an O(n2) approach as the insert method itself uses a loop to get to the appropriate locations. That of course is wasteful since we are already at the appropriate location. The approach given above is O(n).

delete method, but that would be an O(n2) approach. Here is an O(n) approach:

public void removeZeros() {

while (front != null && front.value == 0)

front = front.next;

Node node = front;

while (node != null && node.next != null) {

while (node.next != null && node.next.value == 0)

node.next = node.next.next;

node = node.next;

}

}

Writing this requires some real care. First, dealing with zeroes at the start of the list is different from dealing with them in the middle. To delete a zero at the front of the array, we can use front = front.next. However, it may happen that the next element is also zero and maybe the one after that, so we use a loop. We also need to be careful that the list is not all zeroes, as we can easily end up with a null pointer exception that way. The first two lines of the method take care of all of this.

The next few lines handle zeroes in the middle of the array. We can delete these zeroes by rerouting links in the same way that the delete method does. But we have to be careful about multiple zeroes in a row. To handle this, every time we see a zero, we loop until we stop seeing zeroes or reach the end of the list. Note that despite the nested loops, they both affect the loop variable node, so overall this is still an O(n) algorithm.

To test this method out, try it with a variety of cases including lists of all zeroes, lists that start with multiple zeroes, lists that end with multiple zeroes, and various other combinations.

More about linked lists

Let's look at the running times of our linked list methods.

- The

addToFrontmethod is O(1) as it only involves creating a new node and reassigning thefrontvariable. - The

addmethod, the way we wrote it, is O(n) since we loop all the way to the end of the list before creating and linking the new node. We could turn this into an O(1) method if we maintain abackvariable, analogous to thefrontvariable, that keeps track of where the back of the list is. Doing that requires a little more care in some of the other methods. For instance, in thedeletemethod, we would now need a special case to handle deleting the last item in the list, as thebackvariable would need to change. - The

sizemethod is O(n) because we loop through the entire list to count how many items there are. We could make it O(1) by adding anumElementsvariable to the class, similar to what we have with our dynamic array class. Every time a node is added or removed, we would update that variable. Thesizemethod would then just have to return the value of that variable. - The

isEmptymethod is O(1) since it's just a simple check to see iffrontis null. - Getting and setting elements at specific indices (sometimes called random accesses) are O(n) operations because we need to step through the list item by item to get to the desired index. This is one place where dynamic arrays are better than linked lists, as getting and setting elements in a dynamic array are O(1) operations.

- The

insertanddeletemethods are O(n). The reason is that we have to loop through the list to get to the insertion/deletion point. However, once there, the insertion and deletion operations are quick. This is in contrast to dynamic arrays, where getting to the insertion/deletion point is quick, but the actual insertion/deletion is not so quick because all the elements after the insertion/deletion point need to be moved.

Comparing dynamic arrays and linked lists

Dynamic arrays are probably the better choice in most cases. One of the big advantages of dynamic arrays has to do with cache performance. Not all RAM is created equal. RAM access can be slow, so modern processors include a small amount cache memory that is faster to access than regular RAM. Arrays, occupying contiguous chunks of memory, can be easily moved into the cache all at once. Linked list nodes, which are spread out through memory, can't be moved into the cache as easily.

Dynamic arrays are also better when a lot of random access (accessing things in the middle of the list) is required. This is because getting and setting elements is O(1) for dynamic arrays, while it is O(n) for linked lists.

In terms of memory usage, either type of list is fine for most applications. For really large lists, remember that linked lists require extra memory for all the links, an extra five or six bytes per list element. Dynamic arrays have their own potential problems in that not every space allocated for the array is used, and for really large arrays, finding a contiguous block of memory could be tricky, especially if memory has become fragmented (as chunks of memory are allocated and freed, memory can start to look like Swiss cheese).

Despite the fact that dynamic arrays are the better choice for most applications, it is still worth learning linked lists, as they are a fundamental topic in computer science that every computer science student is expected to know. They are popular in job interview questions, and the ideas behind them are useful in many contexts. For instance, some file systems use linked lists to store the blocks of the file spread out over a disk drive.

Doubly- and circularly-linked lists

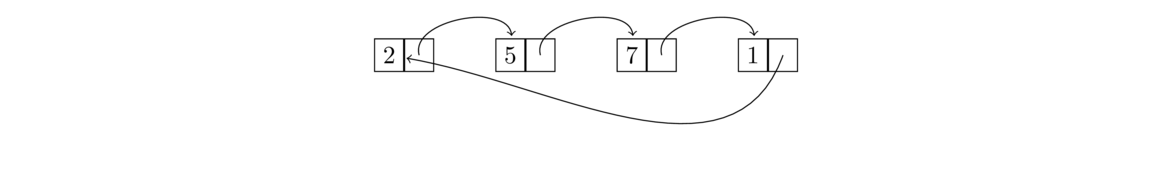

There are a couple of useful variants on linked lists. The first is a circularly-linked list is one where the link of the last node in the list points back to the start of the list, as shown below.

Working with circularly-linked lists is similar to working with ordinary ones except that there's no null link at the end of the list (you might say the list technically has no start and no end, just being a continuous loop). One thing to do if you need to access the “end” of the list is to loop until node.next equals front. A key advantage of a circularly-linked list is that you can loop over its elements continuously.

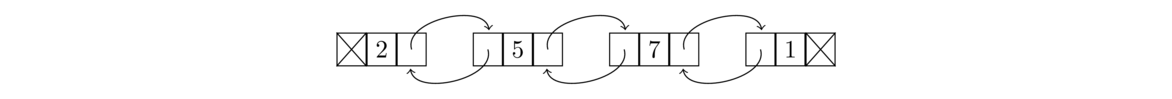

The second variant of a linked list is a doubly-linked list, where each node has two links, one to the next node and one to the previous node, as shown below:

Having two links can be useful for methods that need to know the predecessor of a node or for traversing the list in reverse order. Doubly-linked lists are a little more complex to implement because we now have to keep track of two links for each node. To keep track of all the links, it can be helpful to sketch things out. For instance, here is a sketch useful for adding a node to the front of a doubly-linked list.

We see from the sketch that we need to create a new node whose next link points to the old front and whose previous link is null. We also have to set the previous link of the old front to point to this new node, and then set the front marker to the new node. A special case is needed if the list is empty.

Making the linked list class generic

The linked list class we created works only with integer data. We could modify it to work with strings by going through and replacing the int declarations with String declarations, but that would be tedious. And then if we wanted to modify it to work with doubles or longs or some object, we would have to do the same tedious replacements all over again. It would be nice if we could modify the class once so that it works with any type. This is where Java's generics come in.

The process of making a class work with generics is pretty straightforward. We will add a little syntax to the class to indicate we are using generics and then replace the int declarations with T declarations. The name T, short for “type”, acts as a placeholder for a generic type. (You can use other names instead of T, but the T is somewhat standard.)

First, we have to introduce the generic type in the class declaration:

public class GLList<T>

The slanted brackets are used to indicate a generic type. After that, the main thing we have to do is anywhere we refer to the data type of the values stored in the list, we have to change it from int to T. For example, the get method changes as shown below:

public int get(int index) { public T get(int index) {

int count = 0; int count = 0;

Node node = front; Node node = front;

while (count < index) { while (count < index) {

count++; count++;

node = node.next; node = node.next;

} }

return node.value; return node.value;

} }

Notice that the return type of the method is now type T instead of int. Notice also that index stays an int. Indices are still integers, so they don't change. It's only the data type stored in the list that changes to type T.

One other small change is that when comparing generic types, using == doesn't work anymore, so we have to replace that with a call to .equals, just like when comparing strings in Java.

Here is some code that shows how to create an object from the generic class (which we'll call GLList)

GLList<String> list = new GLList<String>();

Lists in the Java Collections Framework

While it is instructive to write our own list classes, it is best to use the ones provided by Java for most practical applications. Java's list classes have been extensively tested and optimized, whereas ours are mostly designed to help us understand how the two types of lists work. On the other hand, it's nice to know that if we need something slightly different from what Java provides, we could code it.

Java's Collections Framework contains, among many other things, an interface called List. There are two classes implementing it: a dynamic array class called ArrayList and a linked list class called LinkedList.

Here are some sample declarations:

List<Integer> list = new ArrayList<Integer>(); List<Integer> list = new LinkedList<Integer>();

The Integer in the slanted braces indicates the data type that the list will hold. This is an example of Java generics. We can put any class name here. Here are some examples of other types of lists we could have:

List<String>— list of strings

List<Double>— list of doubles

List<Card>— list ofCardobjects (assuming we've created aCardclass)

List<List<Integer>>— list of integer lists

The data type always has to be a class. In particular, since int is a primitive data type and not a class, we need to use Integer, which is the class version of int, in the declaration. Here are a few lines of code demonstrating how to work with lists in Java:

// Create list, add to list, print list

List<Integer> list = new ArrayList<Integer>();

list.add(2);

list.add(3);

System.out.println(list);

// change item at index 1 to equal 99

list.set(1, 99);

// Nice way to add several things at once

Collections.addAll(list, 4, 5, 6, 7, 8, 9, 10);

// Randomly reorder the things in the list

Collections.shuffle(list);

// Loop through list

for (int i=0; i<list.size(); i++)

System.out.println(list.get(i));

// Loop through list using Java's foreach loop

for (int x : list)

System.out.println(x);

Note: Java has two list classes built in: one from java.util and one from java.awt. You want the one from java.util. If you find yourself getting weird list errors, check to make sure the right list type is imported.

List methods

Here are the most useful methods of the List interface. They are available to both ArrayLists and LinkedLists.

| Method | Description |

|---|---|

add(x) | adds x to the list |

add(i, x) | adds x to the list at index i |

contains(x) | returns whether x is in the list |

equals(list2) | returns whether the list equals list2 |

get(i) | returns the value at index i |

indexOf(x) | returns the first index (location) of x in the list |

isEmpty() | returns whether the list is empty |

lastIndexOf(x) | like indexOf, but returns the last index |

remove(i) | removes the item at index i |

remove(x) | removes the first occurrence of the object x |

removeAll(x) | removes all occurrences of x |

set(i, x) | sets the value at index i to x |

size() | returns the number of elements in the list |

subList(i,j) | returns a slice of a list from index i to j-1, sort of like substring

|

The constructor itself is also useful for making a copy of a list. See below:

List<Integer> list2 = new ArrayList<Integer>(list);

Note that setting list2 = list would not have the same effect. It would just create an alias, where list and list2 would be two names for the same object in memory. The code above, however, creates a brand new list, so that list and list2 reference totally different lists in memory. Forgetting this is a really common source of bugs.

Methods of java.util.Collections

Here are some useful static Collections methods that operate on lists (and, in some cases, other Collections objects that we will see later):

| Method | Description |

|---|---|

addAll(list, x1,..., xn) | adds x1, x2, …, xn to the list a |

binarySearch(list, x) | returns whether x is in list using a binary search |

frequency(list, x) | returns a count of how many times x occurs in list |

max(list) | returns the largest element in list |

min(list) | returns the smallest element in list |

replaceAll(list, x, y) | replaces all occurrences of x in list with y |

reverse(list) | reverses the elements of list |

shuffle(list) | puts the contents of list in a random order |

sort(list) | sorts list |

A couple of examples

To demonstrate how to work with lists, here is some code that builds a list of the dates of the year and then prints random dates. Notice that we make use of lists and loops to do this instead of copy-pasting 365 dates.

List<String> months = new ArrayList<String>();

List<Integer> monthDays = new ArrayList<Integer>();

List<String> dates = new ArrayList<String>();

Collections.addAll(months, "Jan", "Feb", "Mar", "Apr",

"May", "Jun", "Jul", "Aug", "Sep", "Oct", "Nov", "Dec");

Collections.addAll(monthDays, 31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31);

for (int i=0; i<12; i++)

for (int j=1; j<monthDays.get(i)+1; j++)

dates.add(months.get(i) + " " + j);

// print out 10 random dates where repeats could possibly happen

Random random = new Random();

for (int i=0; i<10; i++)

System.out.println(dates.get(random.nextInt(dates.size())));

// print out 10 random dates where we don't want repeats

Collections.shuffle(dates);

for (int i=0; i<10; i++)

System.out.println(dates.get(i));

Here is another example that reads from a wordlist file and prints a few different things about the words in the file. The word list file is assumed to have one word on each line. Wordlists of common English words are easy to find on the internet and are particularly useful.

List<String> words = new ArrayList<String>();

Scanner scanner = new Scanner(new File("wordlist.txt"));

while (scanner.hasNext())

words.add(scanner.nextLine());

System.out.println(words.get(2806)); // print a word from the middle of the list

System.out.println(words.size()); // print how many words are in the list

System.out.println(words.get(words.size()-1)); // print the last word in the list.

// Find the longest word in the list

String longest = "";

for (String word : words)

if (word.length() > longest.length())

longest = word;

System.out.println(longest);

As one further example, consider the following problem:

Write a program that asks the user to enter a length in feet. The program should then give the user the option to convert from feet into inches, yards, miles, millimeters, centimeters, meters, or kilometers. Say if the user enters a

1, then the program converts to inches, if they enter a2, then the program converts to yards, etc.

While this could certainly be written using if statements or a switch statement, if there are a lot of conversions to make, the code will be long and messy. The code can be made short and elegant by storing all the conversion factors in a list. This would also make it easy to add new conversions as we would just have to add some values to the list, as opposed to copying and pasting chunks of code. Moreover, the conversion factors could be put into a file and read into the list, meaning that the program itself need not be modified at all. This way, our users (who may not be programmers) could easily add conversions.

This is of course a really simple example, but it hopefully demonstrates the main point—that lists can eliminate repetitious code and make programs easier to maintain.

Stacks and Queues

Introduction

This chapter is about two of the simplest and most useful data structures—stacks and queues. Stacks and queues behave like lists of items, each with its own rules for how items are added and removed.

Stacks

A stack is a LIFO structure, short for last in, first out. Think of a stack of dishes, where the last dish placed on the stack is the first one to be taken off. Or as another example, think of a discard pile in a game of cards where the last card put on the pile is the first one to be picked up. Stacks have two main operations: push and pop. The push operation puts a new element on the top of the stack and the pop element removes whatever is on the top of the stack and returns it to the caller.

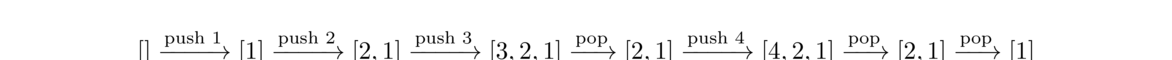

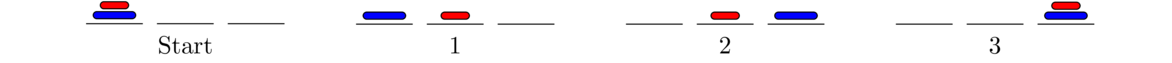

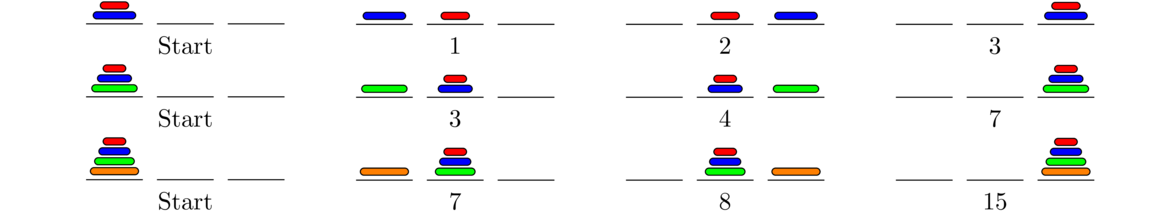

Below is an example of a few stack operations starting from an empty stack. The top of the stack is at the left.

Queues

A queue is a FIFO structure, short for first in, first out. A queue is like a line (or queue) at a store. The first person in line is the first person to check out. If a line at a store were implemented as a stack, on the other hand, the last person in line would be the first to check out, which would lead to lots of angry customers. Queues have two main operations, add and remove. The add operation adds a new element to the back of the queue, and the remove operation removes the element at the front of the queue and returns it to the caller.

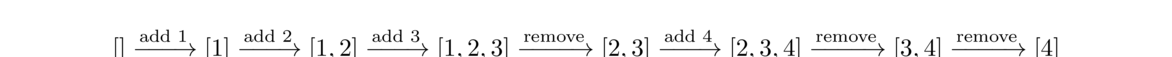

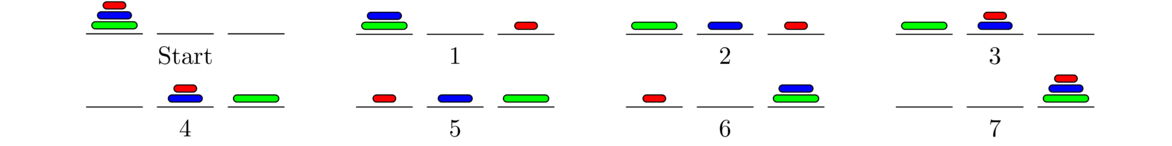

Here is an example of some queue operations, where the front of the queue is at the left.

Deques

There is one other data structure, called a deque, that we will mention briefly. It behaves like a list that supports adding and removing elements on either end. The name deque is short for double-ended queue. Since it supports addition and deletion at both ends, it can also be used as a stack or a queue.

Implementing a stack

Before we look at applications of stacks and queues, let's look at how they can be created from scratch. While we can implement stacks and queues using arrays, we will instead use linked lists, in part to give us more practice with them. However, it is a good exercise to try implementing both data structures with arrays.**Queues, in particular, can be implemented using something called a circular array.

Below is a working stack class:

public class LLStack<T> {

public class Node {

public T value;

public Node next;

public Node(T value, Node next) {

this.value = value;

this.next = next;

}

}

private Node top;

public LLStack() {

top = null;

}

public void push(T value) {

top = new Node(value, top);

}

public T pop() {

T returnValue = top.value;

top = top.next;

return returnValue;

}

}

This not much different from our linked list class. The Node class is the same, the variable top is the same as the linked list class's front variable, and the push method is the same as the addToFront method. The only new thing is the pop method, which removes the top of the stack via the line top = top.next and also returns the value stored there.

Notice that the push and pop methods both have an O(1) running time.

Implementing a queue

Implementing a queue efficiently takes a bit more work than a stack, but not too much more. Here it is:

public class LLQueue<T> {

private class Node {

public T value;

public Node next;

public Node(T value, Node next) {

this.value = value;

this.next = next;

}

}

Node front;

Node back;

public LLQueue() {

front = back = null;

}

public void add(T value) {

Node newNode = new Node(value, null);

if (back != null)

back = back.next = newNode;

else

front = back = newNode;

}

public T remove() {

T save = front.value;

front = front.next;

if (front == null)

back = null;

return save;

}

}

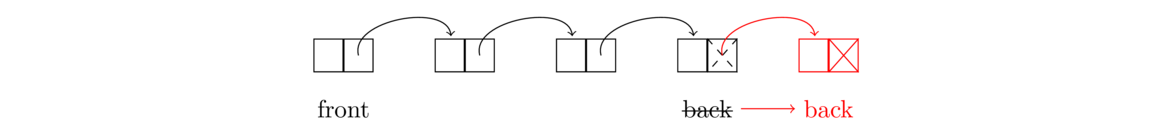

With a stack, pushing and popping happen both at the first item in the list. With a queue, removing items happens at the first item, but adding happens at the end of the list. This means that our queue's remove method is mostly the same as our stack's pop method, but to make it so the add method is O(1), we add a back variable that keeps track of what node is in the back. Keeping track of that back variable adds a few complications, as we need to be extra careful when the queue empties out. See the figure below for what is going on with the add method:

Note that both the add and remove methods are O(1).

Stacks, queues, and deques in the Collections Framework

The Collections Framework supports all three of these data structures. The Collections Framework does contain a Stack interface, but it is old and flawed and only kept around to avoid breaking old code. The Java documentation recommends against using it. Instead, it recommends using the Deque interface, which is usually implemented by the ArrayDeque class. That class can also implement queues, so we will use it to do so. Here is some code showing how things work:

Deque<Integer> stack = new ArrayDeque<Integer>(); Deque<Integer> queue = new ArrayDeque<Integer>(); stack.push(3); System.out.println(stack.pop()); queue.add(3); System.out.println(queue.remove());

The <Deque> interface also contains a method called element, which can be used to view the top/front of the stack/queue without removing it. It also contains size and isEmpty methods, and more. In particular, a deque, or double-ended queue, allows for O(1) additions and removals at both the front and back. The methods for these are addFirst, addLast, removeFirst, and removeLast. The stack and queue methods push, pop, etc. are synonyms for certain of these.

Applications

Stacks and queues have many applications. Here are a few.

- Stacks are useful for implementing undo/redo functionality, as well as for the back/forward buttons in a web browser. For undo/redo, as actions are undone, they are put onto a stack. To redo the actions, we pop things from that stack, so the most recently undone action is the one that gets redone.

- For card games, stacks are useful for representing a discard pile, where the card most recently placed on the pile is the first one to be picked back up. Queues are useful for the game War, where players play the top card from their hand and cards that are won are placed on the bottom of the hand.

- Stacks can be used to reverse a list. Here is a little code to demonstrate that:

public static void reverse(List<Integer> list) { Deque<Integer> stack = new ArrayDeque<Integer>(); for (int x : list) stack.push(x); for (int i=0; i<list.size(); i++) list.set(i, stack.pop()); } - A common algorithm using stacks is for checking if parentheses are balanced. To be balanced, each opening parenthesis must have a matching closing parenthesis and any other parentheses that come between the two must themselves be opened and closed before the outer group is closed. For instance, the parentheses in the expression (2*[3+4*{5+6}]) are balanced, but those in (2+3*(4+5) and 2+(3*[4+5)] are not. A simple stack-based algorithm for this is as follows: Loop through the expression. Every time an opening parenthesis is encountered, push it onto the stack. Every time a closing parenthesis is encountered, pop the top element from the stack and compare it with the closing parenthesis. If they match, then things are still okay. If they don't match, then the expression is trying to close one type of parenthesis with another type, indicating a problem. If the stack is empty when we try to pop, that also indicates a problem, as we have a closing parenthesis with no corresponding opening parenthesis. Finally, if the stack is not empty after we are done reading through the expression, that also indicates a problem, namely an opening parenthesis with no corresponding closing parenthesis.

- Stacks are useful for parsing formulas. Suppose, for example, we need to write a program to evaluate user-entered expressions like 2*(3+4)+5*6. One technique is to convert the expression into what is called postfix notation (or Reverse Polish notation) and then use a stack. In postfix, an expression is written with its operands first, followed by the operators. Ordinary notation, with the operator between its operands, is called infix notation.

For example, 1+2 in infix becomes 1 2 + in postfix. The expression 1+2+3 becomes 1 2 3 + +, and the expression (3+4)*(5+6) becomes 3 4 + 5 6 + *.

To evaluate a postfix expression, we use a stack of operands and results. We move through the formula left to right. Every time we meet an operand, we push it onto the stack. Every time we meet an operator, we pop the top two items from the stack, apply the operand to them, and push the result back onto the stack.

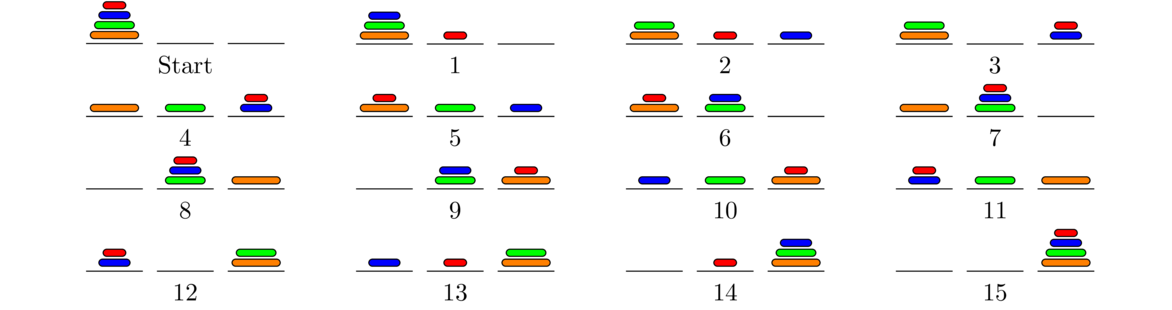

For example, let's evaluate (3+4)*(5+6), which is 3 4 + 5 6 + * in postfix. Here is the sequence of operations.

The result is 77, the only thing left on the stack at the end. Postfix notation has some benefits over infix notation in that parentheses are never needed and it is straightforward to write a program to evaluate postfix expressions. In fact, many calculators of the '70s and '80s used postfix for just this reason. Calculator users would enter their formulas using postfix notation. To convert from infix to postfix there are several algorithms. A popular one is Dijkstra's Shunting Yard Algorithm. It uses stacks as a key part of what it does.3 push it onto stack. Stack = [3]4 push it onto stack. Stack = [4, 3]+ pop 4 and 3 from stack, compute 3+4=7, push 7 onto stack. Stack = [7]5 push it onto stack. Stack = [5, 7]6 push it onto stack. Stack = [6, 5, 7]+ pop 6 and 5 from stack, compute 5+6=11, push 11 onto stack. Stack = [11, 7]* pop 11 and 7 from stack, compute 7*11=77, push 77 onto stack Stack = [77] - Queues are used in operating systems for scheduling tasks. Often, if the system is busy doing something, tasks will pile up waiting to be executed. They are usually done in the order in which they are received, and a queue is an appropriate data structure to handle this.

- Most programming languages use a call stack to handle function calls. When a program makes a function call, it needs to store information about where in the program to return to after the function is done as well as reserve some memory for local variables. The call stack is used for these things. To see why a stack is used, suppose the main program calls function A which in turn calls function B. Once B is done, we need to return to A, which was the most recent function called before B. Since we always need to go back to the most recent function, a stack is appropriate. The call stack is important for recursion, as we will see in Chapter 4.

- Breadth-first search/Depth-first search — These are useful searching algorithms that are discussed in Section 10.4. They can be implemented with nearly the same algorithm, the only major change being that breadth-first search uses a queue, whereas depth-first search uses a stack.

In general, queues are useful when things must be processed in the order in which they were received, and stacks are useful if things must be processed in the opposite order, where the most recent thing must be processed first.

Recursion

Introduction

Informally, recursion is the process where a function calls itself. More than that, it is a process of solving problems where you use smaller versions of the problem to solver larger versions of the problem. Though recursion takes a little getting used to, it can often provide simple and elegant solutions to problems.

Here is a recursive function to reverse a string:

public static String reverse(String s) {

if (s.equals(""))

return "";

return reverse(s.substring(1)) + s.charAt(0);

}

Notice how the function calls itself in the last line. To understand how the function works, consider the string abcde. Pull off the first letter to break the string into a and bcde. If we reverse bcde and add a to the end of it, we will have reversed the string. That is what the following line does:

return reverse(s.substring(1)) + s.charAt(0);

The key here is that bcde is smaller than the original string. The function gets called on this smaller string and it reverses that string by breaking off b from the front and reversing cde. Then cde is reversed in a similar way. We continue breaking the string down until we can't break it down any further, as shown below:

reverse("abcde") = reverse("bcde") + "a"

reverse("bcde") = reverse("cde") + "b"

reverse("cde") = reverse("de") + "c"

reverse("de") = reverse("e") + "d"

reverse("e") = reverse("") + "e"

reverse("") = ""

Building back up from the bottom, we go from "" to "e" to "ed" to "edc", etc. All of this is encapsulated in the single line of code shown below again, where the reverse function keeps calling itself:

return reverse(s.substring(1)) + s.charAt(0);

We stop the recursive process when we get to the empty string "". This is our base case. We need it to prevent the recursion from continuing forever. In the function it corresponds to the following two lines:

if (s.equals(""))

return "";

Another way to think of this is as a series of nested function calls, like this:

reverse("abcde") = reverse(reverse(reverse(reverse(reverse("")+"e")+"d")+"c")+"b")+"a";

When I sit down to write something recursively, I have all of the above in the back of my mind, but trying to think about that while writing the code makes things harder. Instead, I ask myself the following:

- How can I solve the problem in terms of smaller cases of itself?

- How will I stop the recursion? (In other words, what are the small cases that don't need recursion?)

One of the most useful breakdowns for a string is to break it into its head (first character) and tail (everything else). The head in Java is s.charAt(0), and the tail is s.substring(1).